“Smart” dresser prototype guides people with dementia in getting dressed

May 01, 2018

DRESS Holds Promise for Alleviating Caregiver Burden, Creating Independence Among People with Cognitive Disorders

A new study published in JMIR Medical Informatics describes how a “smart home” prototype may help people with dementia dress themselves through automated assistance, allowing them to maintain independence and dignity while giving their caregivers a much-needed respite.

People with dementia or other cognitive disorders have difficulty with everyday activities – such as bathing, dressing, eating, and cleaning – which in turn makes them increasingly dependent on caregivers. Dressing is one of the most common and stressful of these activities for both people with dementia and their caregivers because of the complexity of the task and the lack of privacy. Research shows that adult children find it particularly challenging to help dress their parents, especially a parent of the opposite gender.

Researchers at NYU Rory Meyers College of Nursing, Arizona State University, and MGH Institute of Health Professions are applying “smart home” concepts to the challenges of dressing people with dementia. Using input from caregiver focus groups, they developed an intelligent dressing system named DRESS, which combines automated tracking and recognition with guided assistance to help a person with dementia get dressed without a caregiver in the room.

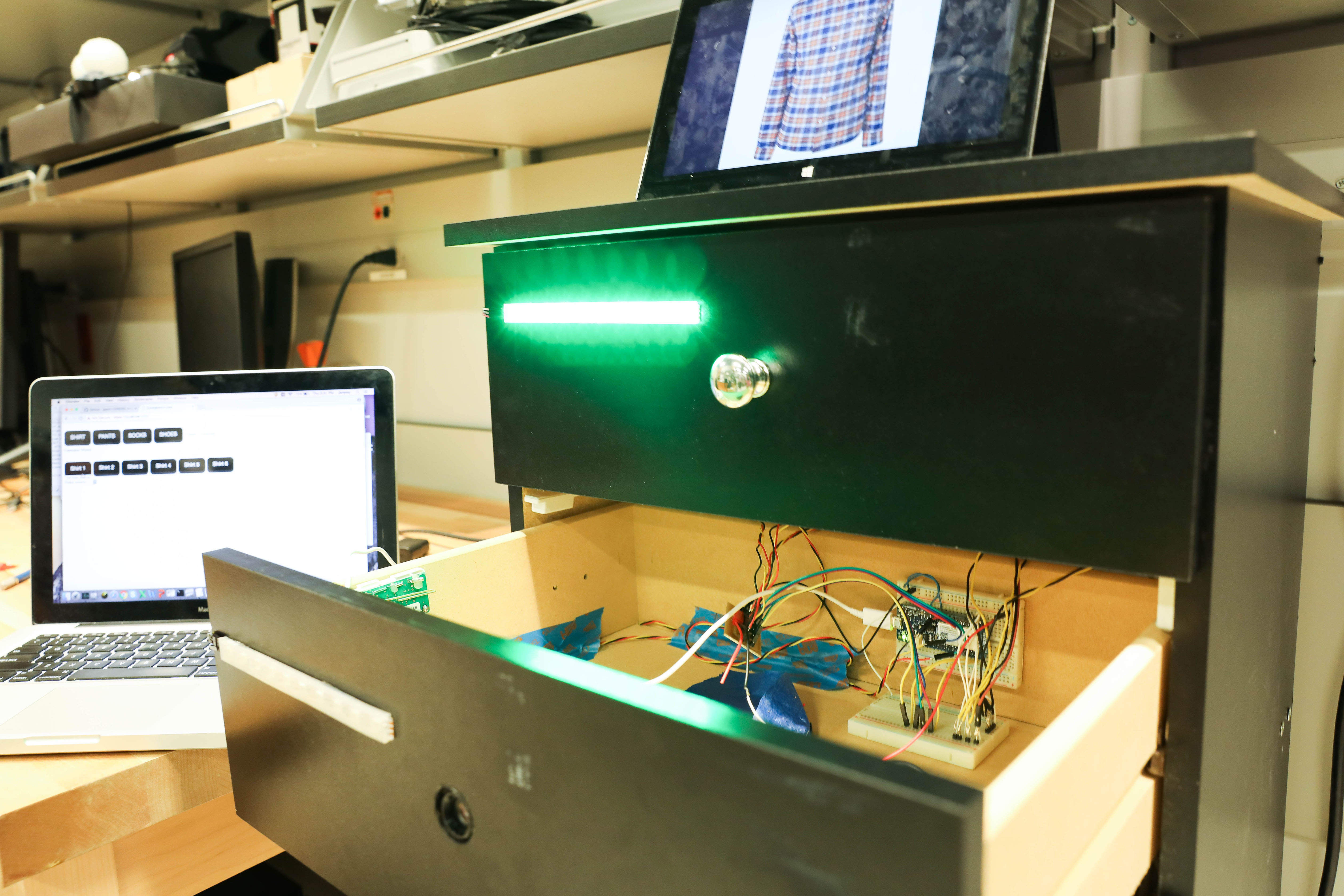

The DRESS prototype tracks progress through the dressing process with a combination of sensors and image recognition, reading barcodes on the clothing to identify each garment’s type, location, and orientation. A five-drawer dresser, topped with a tablet, camera, and motion sensor, holds one piece of clothing per drawer, arranged in an order that follows the individual’s dressing preferences. A skin conductance sensor worn as a bracelet monitors the person’s stress levels and related frustration.

The caregiver starts the DRESS system and then monitors progress from an app. The person with dementia receives an audio prompt, recorded in the caregiver’s voice, to open the top drawer, which lights up at the same time. The camera detects the barcodes on the clothing. If an item of clothing is put on correctly, the DRESS system prompts the person to move to the next step; if it detects an error or a lack of activity, it plays audio prompts for correction and encouragement. If it detects ongoing issues or an increase in stress levels, the system can alert the caregiver that help is needed.
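The article does not publish the DRESS software, but the prompting loop it describes can be sketched as a small state machine. This is a hypothetical illustration, not the researchers' implementation: the class and method names, the garment list, the normalized stress threshold of 0.8, and the three-retry limit are all assumptions, and the real sensor input (barcode detection, motion, skin conductance) is reduced to one observation and one stress number per step.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Observation(Enum):
    """Simplified sensor result for the current garment (hypothetical)."""
    CORRECT = auto()      # garment on, in the right orientation
    ERROR = auto()        # e.g. inside out or backwards
    NO_ACTIVITY = auto()  # nothing detected within a timeout


@dataclass
class DressingSession:
    """Hypothetical sketch of a DRESS-style prompting loop, not the real system."""
    drawers: list[str]             # one garment per drawer, top drawer first
    stress_threshold: float = 0.8  # assumed normalized skin-conductance cutoff
    max_retries: int = 3           # assumed tolerance before escalating
    step: int = 0
    retries: int = 0

    def prompt(self) -> str:
        # In DRESS this would be audio in the caregiver's voice plus a lit drawer.
        return f"Please open drawer {self.step + 1} and put on the {self.drawers[self.step]}."

    def update(self, obs: Observation, stress: float) -> str:
        """Return the next action for one observation and one stress reading."""
        if self.step >= len(self.drawers):
            return "NOTIFY caregiver: dressing complete"
        # Rising stress escalates to the caregiver regardless of progress.
        if stress >= self.stress_threshold:
            return "ALERT caregiver: stress rising"
        if obs is Observation.CORRECT:
            self.step += 1
            self.retries = 0
            if self.step == len(self.drawers):
                return "NOTIFY caregiver: dressing complete"
            return self.prompt()
        # Error or inactivity: correct or encourage, escalate on repeated issues.
        self.retries += 1
        if self.retries > self.max_retries:
            return "ALERT caregiver: repeated issues"
        garment = self.drawers[self.step]
        if obs is Observation.ERROR:
            return f"Let's try the {garment} again; check it is right side out and facing forward."
        return f"You're doing well. The {garment} is in drawer {self.step + 1}."


# Hypothetical garment order for a five-drawer dresser.
session = DressingSession(["underwear", "undershirt", "shirt", "pants", "socks"])
print(session.prompt())
print(session.update(Observation.ERROR, stress=0.3))
print(session.update(Observation.CORRECT, stress=0.3))
```

Because each drawer holds exactly one garment, the step counter doubles as the drawer number, so "open the top drawer" becomes drawer 1 and each correct detection simply advances the index.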

“Our goal is to provide assistance for people with dementia to help them age in place more gracefully, while ideally giving the caregiver a break as the person dresses – with the assurance that the system will alert them when the dressing process is completed or prompt them if intervention is needed,” said Winslow Burleson, PhD, associate professor at NYU Rory Meyers College of Nursing, director of the NYU-X Lab, and the study’s lead author.

“The intent of the DRESS prototype is to integrate typical routines and humanized interactions, promote normalcy and safety, and allow for customization to guide people with dementia through the dressing process.”

In preparation for in-home studies, the study published in JMIR Medical Informatics tested how accurately the DRESS prototype could detect proper dressing. Eleven healthy participants simulated common dressing scenarios, ranging from normal dressing to putting on a shirt inside out or backwards and dressing only partially, which are typical issues that challenge people with dementia and their caregivers.

The study showed that the DRESS prototype could detect clothing orientation and position, as well as infer a person’s current state of dressing, using its combination of sensors and software. In the initial phases of putting on shirts or pants, the prototype correctly detected participants’ clothing 384 of 388 times. However, it could not consistently tell when a participant had finished putting on an item of clothing, missing these final cues in 10 of 22 cases for shirts and 5 of 22 cases for pants.
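To put these counts side by side, the short calculation below converts them into detection rates. It uses only the figures reported above; the percentages are derived arithmetic, not numbers quoted from the paper, and the helper name `rate` is illustrative.

```python
def rate(hits: int, total: int) -> float:
    """Percentage of trials in which the prototype detected the event."""
    return 100.0 * hits / total


# Counts reported in the study summary.
initial = rate(384, 388)               # initial donning phases detected
shirt_completion = rate(22 - 10, 22)   # 10 of 22 shirt completions missed
pants_completion = rate(22 - 5, 22)    # 5 of 22 pants completions missed

print(f"initial detection: {initial:.1f}%")          # 99.0%
print(f"shirt completion:  {shirt_completion:.1f}%") # 54.5%
print(f"pants completion:  {pants_completion:.1f}%") # 77.3%
```

The gap between roughly 99% in the initial phases and about 55% to 77% at completion is what motivates the reliability improvements the researchers propose.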

Based on their findings, the researchers identified ways to improve the prototype’s reliability, including enlarging the barcodes, minimizing the folding of garments so that barcodes are not hidden, and positioning participants optimally relative to the DRESS prototype.

“With improvements identified by this study, the DRESS prototype has the potential to provide automated dressing support to assist people with dementia in maintaining their independence and privacy, while alleviating the burden on caregivers,” said Burleson, who is also affiliated with NYU’s Tandon School of Engineering, Steinhardt School, Courant Institute, and College of Global Public Health.

In addition to Burleson, study authors include Diane Mahoney of MGH Institute of Health Professions and Cecil Lozano, Vijay Ravishankar, and Jisoo Lee of Arizona State University. The research was supported by the National Institute of Nursing Research of the National Institutes of Health (R21NR013471).